Composable protocols powering beautiful purpose-built apps

How to aggregate on-chain and off-chain activity into a unified experience

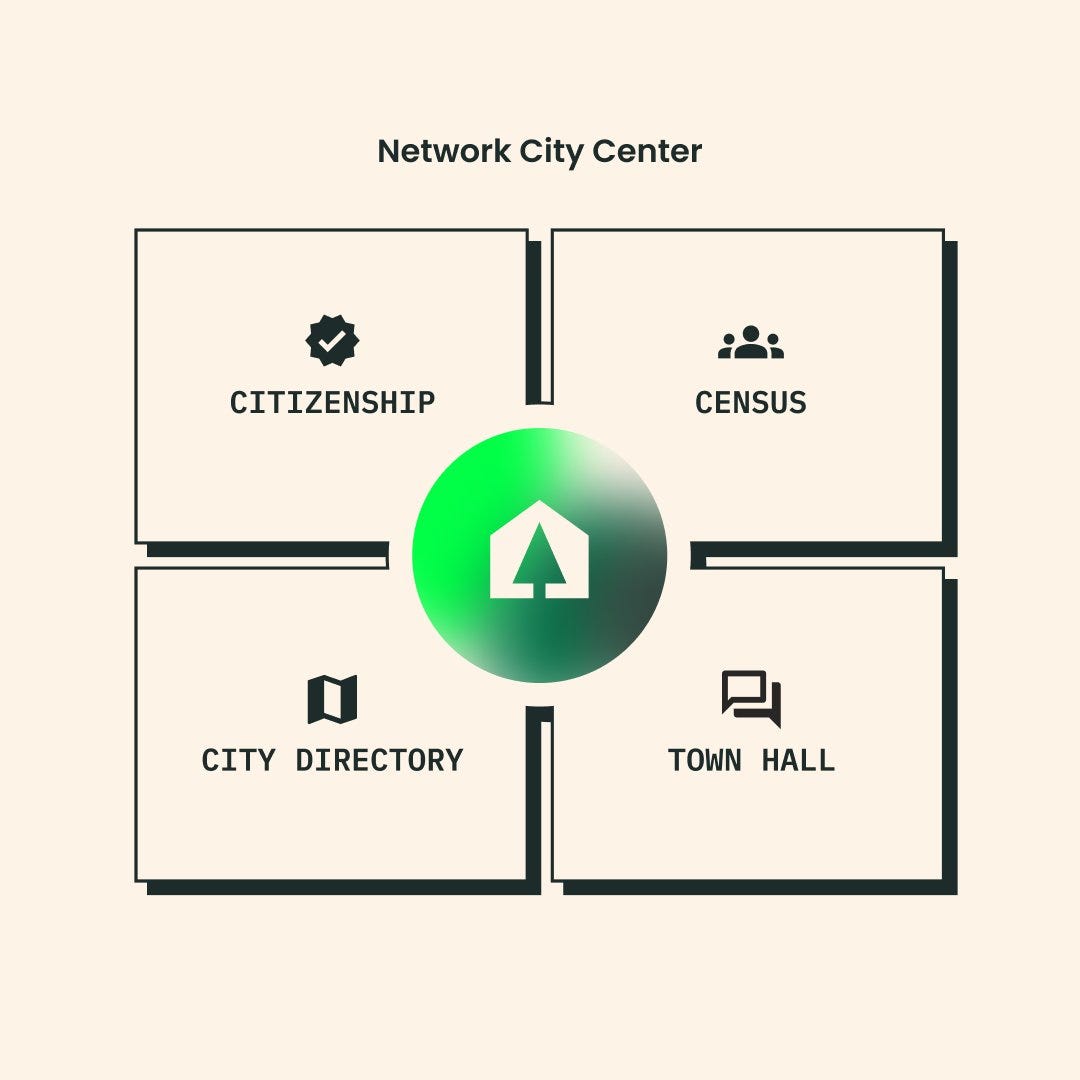

Cabin recently launched their network city, which unites their community, subscription memberships and physical co-living neighborhoods into a powerful, purpose-built experience.

These flagship products are powered by composable protocols and assembled into a single, coherent app built just for Cabin’s community.

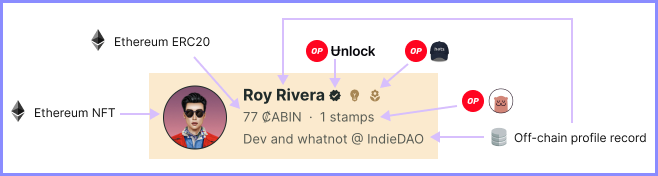

If you look closely at the Cabin Census, you will notice some cool things about each profile.

Working from left to right, you can see multiple protocols across multiple chains all presented in a single profile view:

Avatar image is pulled from an NFT on Ethereum

₡ABIN token balance is pulled from an ERC20 contract on Ethereum

Profile name and description are pulled from an off-chain database

Citizenship checkmark is pulled from Unlock Protocol

Role icons are pulled from Hats Protocol

Stamps are pulled from Otterspace

It’s a protocol smorgasbord and we love it 😍

How to aggregate multiple protocols

The challenge with operating across multiple protocols and chains is visualizing all of this information in a coherent way. Unfortunately, there isn’t one obvious way to do this, so we will explore the options and identify the trade-offs of each.

First, let’s narrow down the problem that needs to be solved.

Ultimately, we are trying to store profile data to achieve the following:

Display both on-chain and off-chain profile attributes in a single view

Display a list of profiles (many data points across many profiles)

Sort and filter profiles by on-chain attributes (e.g. token balance)

Sort and filter profiles by off-chain attributes (e.g. profile name)

The user experience in the end is a directory of profiles with search, filter and sort options.
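Whatever storage option is chosen, the end goal looks roughly like the sketch below: one record per profile that mixes on-chain and off-chain attributes, queried with search, filter and sort. The `Profile` fields, the `DirectoryQuery` options and `queryDirectory` are hypothetical, and balances are plain numbers for brevity (real token amounts need `bigint` or a BigNumber type).

```typescript
// Hypothetical unified profile record: field names are illustrative only
interface Profile {
  address: string      // wallet address, the key shared by on-chain and off-chain data
  name: string         // off-chain (e.g. from your own database)
  description: string  // off-chain
  cabinBalance: number // on-chain ERC-20 balance, plain number for brevity
  isCitizen: boolean   // on-chain (e.g. derived from a membership NFT)
}

interface DirectoryQuery {
  search?: string        // case-insensitive match on the off-chain name
  citizensOnly?: boolean // filter on an on-chain attribute
  sortBy: 'name' | 'cabinBalance'
  direction: 'asc' | 'desc'
}

// Filter, then sort; filter() returns a new array, so the input is not mutated
function queryDirectory(profiles: Profile[], query: DirectoryQuery): Profile[] {
  const needle = (query.search ?? '').toLowerCase()
  const sign = query.direction === 'asc' ? 1 : -1
  return profiles
    .filter((p) => p.name.toLowerCase().includes(needle))
    .filter((p) => !query.citizensOnly || p.isCitizen)
    .sort((a, b) =>
      query.sortBy === 'name'
        ? sign * a.name.localeCompare(b.name)
        : sign * (a.cabinBalance - b.cabinBalance)
    )
}
```

The point of the sketch is that both kinds of attributes must live side by side before a single query can filter and sort across them, which is what the options below try to achieve.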

As we explore the solutions below, we may find that some solutions solve one or more of these challenges but not all of them.

So how does one query this data so that it can be displayed like this? Well, there are a few options!

Events queries (powered by filters)

Analytics tools

The Graph

Do It Yourself

Query aggregate data with events queries

🤔 💭 But can’t we just query the blockchain directly?

In some cases, it makes sense to query events to read data from a smart contract.

For example, the code below uses the ethers library to read token transfers from a specific address.

```typescript
const filterFrom = erc20.filters.Transfer(signer.address);
// {
//   address: '0x70ff5c5B1Ad0533eAA5489e0D5Ea01485d530674',
//   topics: [
//     '0xddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef',
//     '0x00000000000000000000000046e0726ef145d92dea66d38797cf51901701926e'
//   ]
// }

// Search for transfers *from* me in the last 10 blocks
const logsFrom = await erc20.queryFilter(filterFrom, -10, "latest");
```

These queries work well for querying recent events or events within a known block range. The last two parameters of queryFilter limit the blocks that need to be interrogated. With millions of blocks out there, attempting to read events from all of them would result in a timeout.

This approach becomes even more unwieldy when you need to read from multiple contracts.

For this data aggregation problem, the data space is too broad to cover with events queries alone. Querying all events of a certain kind across multiple protocols would take hundreds of queries, if not more, which is unrealistic to do from a web application client.
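To make the scale concrete, here is a sketch that splits a block range into fixed-size windows, each of which would be one log query (for example, one `queryFilter` call). The helper names are hypothetical and the 10,000-block window is an assumption; RPC providers set their own limits on log queries.

```typescript
// An inclusive range of blocks covered by a single log query
interface BlockWindow {
  fromBlock: number
  toBlock: number
}

// Split an inclusive block range into windows of at most `windowSize` blocks
function chunkBlockRange(fromBlock: number, toBlock: number, windowSize: number): BlockWindow[] {
  if (windowSize <= 0) throw new Error('windowSize must be positive')
  const windows: BlockWindow[] = []
  for (let start = fromBlock; start <= toBlock; start += windowSize) {
    windows.push({ fromBlock: start, toBlock: Math.min(start + windowSize - 1, toBlock) })
  }
  return windows
}

// Total number of queries needed to scan the same range on several contracts
function countQueries(fromBlock: number, toBlock: number, windowSize: number, contracts: number): number {
  return chunkBlockRange(fromBlock, toBlock, windowSize).length * contracts
}
```

For example, `chunkBlockRange(0, 24_999, 10_000)` yields three windows ending at blocks 9,999, 19,999 and 24,999, while scanning 18 million blocks on five contracts takes `countQueries(0, 17_999_999, 10_000, 5)`, or 9,000 calls.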

Query aggregate data with analytics tools

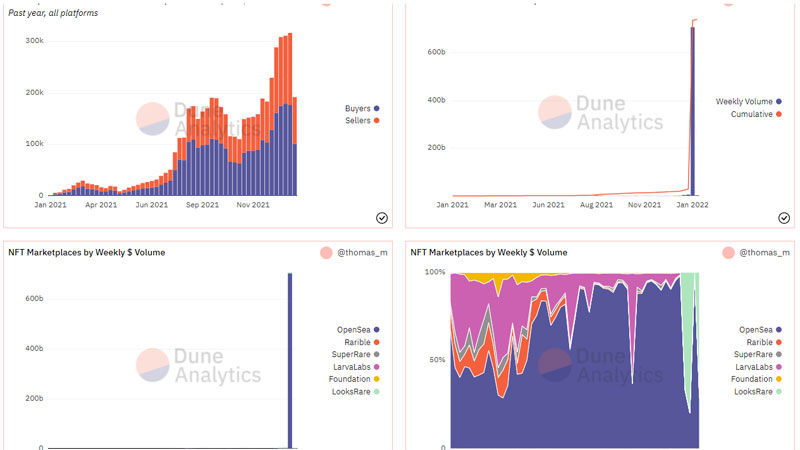

If you simply need to read historical data, there are many analytics tools that read each block as it’s added to the blockchain and make the data available through charts, tables and dashboards.

Dune is a good example that works quite well for many analytics use cases.

Dune also has an API that might work for you if you want to build your own screens against on-chain data.

But what if you want to view both on-chain and off-chain data alongside each other? This is where chain analytics tools fall short as they are only able to read and index on-chain data. You cannot write your own data to them.

Query aggregate data with The Graph

The Graph hosts subgraphs, which are open APIs that organize and serve blockchain data to applications.

Subgraphs are especially helpful in cases where smart contract storage is not structured to support application use cases.

For example, if you wanted to programmatically determine which addresses hold an ERC-20 token like ₡ABIN, you would find that the contract’s read functions do not make this easy: balanceOf returns the balance of an address you already know, but the standard offers no way to list holders. Adding storage to support such use cases increases the gas cost of operating the contract, and most smart contracts avoid putting this undue cost burden on users.

This is where subgraphs come into play. Subgraphs can monitor events on one or more smart contracts and update their off-chain database accordingly.

For example, each ERC-20 transfer event can be handled like so:

```typescript
import { Transfer as TransferEvent } from '../generated/ERC20/ERC20'
import { Transfer } from '../generated/schema'

export function handleTransfer(event: TransferEvent): void {
  // A single transaction can emit several Transfer events, so combine the
  // transaction hash with the log index to get a unique entity ID
  let id = event.transaction.hash.concatI32(event.logIndex.toI32())
  let transfer = new Transfer(id)
  transfer.from = event.params.from
  transfer.to = event.params.to
  transfer.amount = event.params.amount
  transfer.save()
}
```

The above example stores all transfers, but you can define your own entity types and modify them as necessary for your use case. For example, you could create a new User entity each time you detect a transfer to an address you haven’t seen before, and then write a GraphQL query that fetches all User entities that have ever held this token. The Graph will inspect the chain, block by block, until your subgraph is fully synchronized, and will then continue to evaluate new blocks in perpetuity.
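The User-entity idea can be modeled without any Graph tooling: replay Transfer events in block order, keep one record per address, and flag every address that has ever received the token. This is plain TypeScript for illustration, not graph-ts code; the `TransferEvent` and `Holder` shapes and both functions are hypothetical, and amounts are plain numbers for brevity.

```typescript
interface TransferEvent {
  from: string
  to: string
  amount: number
}

interface Holder {
  address: string
  balance: number
  everHeld: boolean
}

const ZERO_ADDRESS = '0x0000000000000000000000000000000000000000'

// Apply one transfer, creating a Holder the first time an address appears
function applyTransfer(holders: Map<string, Holder>, event: TransferEvent): void {
  const load = (address: string): Holder => {
    let holder = holders.get(address)
    if (!holder) {
      holder = { address, balance: 0, everHeld: false }
      holders.set(address, holder)
    }
    return holder
  }
  // Mints come from the zero address, which is not a real holder
  if (event.from !== ZERO_ADDRESS) {
    load(event.from).balance -= event.amount
  }
  // Burns go to the zero address
  if (event.to !== ZERO_ADDRESS) {
    const recipient = load(event.to)
    recipient.balance += event.amount
    recipient.everHeld = true
  }
}

// Equivalent of a query for every account that has ever held the token
function everHolders(holders: Map<string, Holder>): string[] {
  return Array.from(holders.values())
    .filter((h) => h.everHeld)
    .map((h) => h.address)
}
```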

Another really nice part of The Graph is that it manages chain reorganization for you.

Chain reorganization occurs when the network replaces one or more recent blocks with a competing set of blocks, so transactions that appeared to be confirmed can be dropped or reordered. This can be very complex to deal with, depending on when you act on confirmed transactions. If your off-chain database mutates data in response to a transaction that is later dropped, you now need to clean this up on your own somehow. With The Graph, you don’t need to concern yourself with this complexity.
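For a sense of what that complexity involves, here is a rough sketch of the general idea an indexer has to implement on its own: remember the hash of each processed block and, when a new block’s parent hash doesn’t match, walk back to the last block that still matches the canonical chain and roll back everything above it. This is not how The Graph is implemented internally; `BlockHeader`, `IndexedChain`, `findCommonAncestor` and `rollbackTo` are hypothetical.

```typescript
interface BlockHeader {
  number: number
  hash: string
  parentHash: string
}

// Hashes of the blocks already indexed, keyed by block number
type IndexedChain = Map<number, string>

// Returns null if `newBlock` extends the indexed chain. Otherwise returns the
// highest indexed block number whose hash still matches the canonical chain
// (-1 if none do); everything above it was built on dropped blocks.
function findCommonAncestor(
  indexed: IndexedChain,
  newBlock: BlockHeader,
  canonicalHashAt: (blockNumber: number) => string | undefined
): number | null {
  const storedParentHash = indexed.get(newBlock.number - 1)
  if (storedParentHash === undefined || storedParentHash === newBlock.parentHash) {
    return null
  }
  let height = newBlock.number - 1
  while (height >= 0 && indexed.get(height) !== canonicalHashAt(height)) {
    height--
  }
  return height
}

// Forget every indexed block above the ancestor; records derived from those
// blocks (balances, activities, ...) would have to be reverted as well
function rollbackTo(indexed: IndexedChain, ancestor: number): void {
  for (const blockNumber of Array.from(indexed.keys())) {
    if (blockNumber > ancestor) indexed.delete(blockNumber)
  }
}
```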

There are a few limitations to be aware of when it comes to The Graph.

First, you are once again limited to on-chain data since you can only modify entities in response to on-chain events. There is no way to directly mutate entities from an external system.

Second, each subgraph can only index data from a single chain. If your operation spans multiple chains, like Ethereum plus one or more L2s, you are out of luck: you will need a separate subgraph for each chain and will have to aggregate your data elsewhere.
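Aggregating elsewhere can start as a merge of per-chain results keyed by address. This sketch assumes each chain’s subgraph has already been queried and returned rows of `{ address, balance }`; the chain names, the row shape and `mergeChainBalances` are hypothetical, and balances are plain numbers for brevity.

```typescript
interface ChainBalance {
  address: string
  balance: number
}

interface AggregatedBalance {
  address: string
  total: number
  byChain: Record<string, number>
}

// Merge per-chain rows into one record per address
function mergeChainBalances(results: Record<string, ChainBalance[]>): AggregatedBalance[] {
  const merged = new Map<string, AggregatedBalance>()
  for (const [chain, rows] of Object.entries(results)) {
    for (const { address, balance } of rows) {
      // Hex addresses are case-insensitive (mixed case is only a checksum),
      // so normalize before using them as keys
      const key = address.toLowerCase()
      const entry = merged.get(key) ?? { address: key, total: 0, byChain: {} }
      entry.byChain[chain] = (entry.byChain[chain] ?? 0) + balance
      entry.total += balance
      merged.set(key, entry)
    }
  }
  return Array.from(merged.values())
}
```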

Query aggregate data in a purpose-built off-chain database

The last option we will consider is a DIY approach to indexing and aggregating data.

With this solution, you can choose any type of database to store both off-chain and on-chain data.

Off-chain data will be managed however you see fit for your application. Some of it will be written directly by your users, some of it may come from external systems, etc.

On-chain data will need custom synchronization tasks that pull data from on-chain smart contracts and push it into your off-chain database. There are a few ways to accomplish this synchronization, but here we will highlight a set of scheduled jobs that perform it periodically.

If you are going to build your own indexing solution, keep these important items in mind:

Transactional writes

Error handling and retries

Concurrency

Idempotent synchronization

Record de-duplication

Chain reorganization tolerance
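For the error handling and retries item, one common building block is a helper that retries a flaky call, such as an RPC request, with exponential backoff. This is a generic sketch; `withRetry` and its default attempt count and delays are illustrative assumptions to tune for your provider.

```typescript
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms))

// Retry an async operation, doubling the delay after each failure.
// Rethrows the last error once all attempts are used up.
async function withRetry<T>(
  operation: () => Promise<T>,
  maxAttempts = 3,
  baseDelayMs = 500
): Promise<T> {
  let lastError: unknown
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await operation()
    } catch (error) {
      lastError = error
      if (attempt < maxAttempts) {
        await sleep(baseDelayMs * 2 ** (attempt - 1))
      }
    }
  }
  throw lastError
}
```

In-process retries only smooth over brief outages; a failure that outlasts them still needs to be recorded so a later run can pick the work up again.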

The off-chain database

In our case, we chose to use Fauna as an off-chain database. Its serverless, scale-as-you-go model is great for getting new projects off the ground, and it also supports some of the important pieces above, including concurrency, transactional writes and idempotent synchronization. Many of the code snippets below use Fauna’s query language (FQL), but the same principles apply to other databases like Postgres.

Synchronization tasks

The concept behind a synchronization task is similar to how events are handled by the subgraphs we covered earlier. Each time the task is run, a new set of blocks is evaluated and your data is updated accordingly.

This example handles transfer events on an ERC-20 token contract.

```typescript
async function _syncHandler(state: SyncAttemptState): Promise<void> {
  // 1. Query the contract for transfers within the block range
  const { startBlock, endBlock, ref } = state
  const cabinTokenContract = CabinToken__factory.connect(
    cabinTokenConfig.contractAddress,
    state.provider
  )
  const transferFilter = cabinTokenContract.filters.Transfer()
  const transfers = await cabinTokenContract.queryFilter(
    transferFilter,
    startBlock.toNumber(),
    endBlock.toNumber()
  )

  // 2. Determine new balances for affected addresses
  const newBalancesByAddress = /* Hidden for brevity */
  const newBalances = Object.entries(newBalancesByAddress).map(
    ([address, balance]) => ({
      address,
      balance: balance.toString(),
    })
  )

  // 3. Save changed balances to the database
  await syncAccountBalances(ref, newBalances)
}
```

Safe Database Writes

When pulling data from external sources, there are all kinds of weird edge cases to consider. Here are a few examples of unexpected things that could happen.

External errors: The API you are pulling from just might not be available. This could be a blockchain RPC, a subgraph, or any other external system.

Duplicate events: You may, for some reason, encounter the same event twice. For example, the same token mint event might be fed to your system more than once. You don’t want this to create duplicate records in your database.

Concurrent requests: Cron jobs can overlap, or other actions may trigger a sync request. In these cases, you want to prevent the same task from being evaluated twice, which would result in inaccurate data modifications.

There are a few things you can do to safely handle the scenarios above.

First, it helps to ensure that block synchronization is handled sequentially. For each type of data you are synchronizing, you can perform a two-phased commit keyed off of the task type and the block range.
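To make the lifecycle concrete before looking at the Fauna snippets, it can be modeled in memory: an attempt is keyed by task type and block range, a new attempt is refused while a Pending or Successful one exists for the same key and range, a failure reopens the range, and completing an already successful attempt aborts. `SyncAttemptStore` and its methods are hypothetical and single-process; in production these checks must run inside a database transaction.

```typescript
type SyncStatus = 'Pending' | 'Successful' | 'Failed'

interface SyncAttempt {
  id: number
  key: string
  startBlock: number
  endBlock: number
  status: SyncStatus
}

class SyncAttemptStore {
  private attempts: SyncAttempt[] = []
  private nextId = 1

  // Phase 1: claim a block range. Returns null if an attempt for the same
  // key and range is already pending or has already succeeded.
  begin(key: string, startBlock: number, endBlock: number): SyncAttempt | null {
    const blocking = this.attempts.find(
      (a) =>
        a.key === key &&
        a.startBlock === startBlock &&
        a.endBlock === endBlock &&
        a.status !== 'Failed'
    )
    if (blocking) return null
    const attempt: SyncAttempt = { id: this.nextId++, key, startBlock, endBlock, status: 'Pending' }
    this.attempts.push(attempt)
    return attempt
  }

  // Phase 2: mark the attempt successful, refusing duplicates
  complete(id: number): void {
    const attempt = this.get(id)
    if (attempt.status === 'Successful') {
      throw new Error('Sync attempt already completed; aborting duplicate')
    }
    attempt.status = 'Successful'
  }

  // Any error reopens the range so a later run can retry it
  fail(id: number): void {
    this.get(id).status = 'Failed'
  }

  private get(id: number): SyncAttempt {
    const attempt = this.attempts.find((a) => a.id === id)
    if (!attempt) throw new Error(`Unknown sync attempt ${id}`)
    return attempt
  }
}
```

A real implementation also needs a timeout for attempts left in Pending (for example after a crash), otherwise that block range stays locked; the sketch leaves this out.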

The snippet below writes a pending sync attempt to the database. It includes the block range and a key (e.g. “erc20_token_sync_attempt”). Subsequent requests check for a record with a matching key and block range before beginning a new attempt.

```typescript
// Create a new sync attempt for the next N blocks in pending state
return await createSyncAttempt(
  key,
  'Pending',
  startBlock.toString(),
  endBlock.toString()
)
```

Errors of any kind flip the status to “Failed” so a subsequent attempt performs a retry.

```typescript
try {
  await handler(state)
} catch (error) {
  // Any failure marks the attempt as Failed so the next run retries this block range
  await updateSyncAttemptStatus(syncAttempt.ref, 'Failed')
}
```

Each data modification also confirms, within the same database transaction, that the sync attempt has not already been completed.

It’s okay if you don’t fully understand the FQL syntax below. The important thing to keep in mind is that the status check occurs within the database transaction. This is how you prevent concurrent tasks from interfering with one another and maintain data integrity.

```typescript
// This FQL rolls a DB transaction back if a dupe is detected
export const CompleteSyncAttempt = (syncAttemptRef: Expr) => {
  return q.Let(
    {
      syncAttempt: q.Get(syncAttemptRef),
      syncAttemptStatus: q.Select(['data', 'status'], q.Var('syncAttempt')),
    },
    q.If(
      q.Equals(q.Var('syncAttemptStatus'), 'Successful'),
      // If the sync attempt is already successful, revert the transaction - this is a dupe
      q.Abort(
        'Sync attempt already completed. Aborting transaction since this is a duplicate.'
      ),
      q.Update(syncAttemptRef, {
        data: {
          status: 'Successful',
        },
      })
    )
  )
}
```

Idempotence and De-duplication

Not only do we want to prevent a sync task from running more than once, but we also want to handle duplicate domain events. An example of a domain event is a new NFT being minted. An unusual but possible scenario is that the same user mints the NFT twice, perhaps because they can mint more than one or because they go through a mint-burn-mint sequence.

In any case, if you have an activity feed that should create a new post each time a user mints, you will want this to appear as just a single post and not create duplicates.

A common pattern to handle this is to perform an upsert on the record. With an upsert, if a matching record exists, it is updated; otherwise, a new record is inserted.
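The same pattern can be sketched in plain TypeScript, with a `Map` standing in for a unique index on the activity key, so that replaying the same events leaves one record per key. The `Activity` shape and `upsertActivity` are hypothetical.

```typescript
// Hypothetical activity record; `key` is the de-duplication key
// (for example, derived from an NFT token ID)
interface Activity {
  key: string
  type: string
  profileId: string
  timestamp: number
}

// Upsert: overwrite the record if its key already exists, otherwise insert it.
// The Map plays the role of a unique index on `key`.
function upsertActivity(
  store: Map<string, Activity>,
  input: Activity
): 'created' | 'updated' {
  const existed = store.has(input.key)
  store.set(input.key, input)
  return existed ? 'updated' : 'created'
}
```

Because the outcome depends only on the key, running the same sync twice produces the same state as running it once.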

The FQL below achieves such an upsert for an activity feed. An example key could be an NFT token ID. If an activity is already detected for a matching token ID, then a new record is not created.

This approach is also idempotent. If you needed to run a synchronization more than once, perhaps to fix a bug and replay a series of sync tasks, records will be updated instead of created 👌

```typescript
export const UpsertActivity = (
  profile: Expr,
  input: UpsertActivityInput,
) => {
  return q.If(
    q.IsNull(profile),
    null,
    q.Let(
      {
        existingActivitySet: q.Match(q.Index('unique_Activity_key'), input.key),
      },
      q.Let(
        {
          data: q.Merge(input, {
            profile: SelectRef(profile),
            timestamp: ToTimestamp(input.timestamp),
          }),
        },
        q.If(
          q.IsEmpty(q.Var('existingActivitySet')),
          // Activity does not exist, create it
          q.Create(q.Collection('Activity'), {
            data: q.Var('data'),
          }),
          q.Update(RefFromSet(q.Var('existingActivitySet')), {
            data: q.Var('data'),
          })
        )
      )
    )
  )
}
```

Chain reorganization tolerance

One of the most annoying aspects of blockchains is believing a transaction has completed, only to see it dropped when the chain reorganizes around a competing set of blocks.

Imagine this sequence of events:

A user mints an NFT

Your backend runs a synchronization task and records the NFT mint in your database

A chain reorg occurs and the transaction that included the NFT mint is dropped

You now have a record in your database that doesn’t reflect reality

There are a number of ways this can be corrected and as stated before, some services like The Graph handle these reorgs for you. But with your own synchronization system, the absolute easiest thing you can do is set a safe block threshold.

With a safe block threshold, you only evaluate blocks that are at least a set number of blocks behind the latest block. The threshold rests on the assumption that a block is extremely unlikely to be dropped once enough subsequent blocks have been built on top of it. For example, if your threshold is 30 and the latest known block number is 100, then you only evaluate up through block 70. Block 100 would not be evaluated until the latest known block reaches 130.

The code below determines a block range that factors this safe threshold in.

```typescript
import { BigNumber } from 'ethers' // ethers v5

// Find the latest safe block number
const maxSafeBlock = latestBlockNumber - SAFE_BLOCK_THRESHOLD

let startBlock: BigNumber
if (latestSuccessfulAttempt) {
  // If we're already up to date, there is nothing to attempt
  // (gte rather than string equality also avoids an inverted range if the
  // stored end block is ever past the max safe block)
  if (BigNumber.from(latestSuccessfulAttempt.data.endBlock).gte(maxSafeBlock)) {
    return null
  }
  startBlock = BigNumber.from(latestSuccessfulAttempt.data.endBlock).add(1)
} else {
  startBlock = initialBlock
}

// Find the end block (don't exceed the max safe block)
const endBlock = startBlock.add(blockCount).gt(maxSafeBlock)
  ? BigNumber.from(maxSafeBlock)
  : startBlock.add(blockCount)
```

The big trade-off when you establish a safe block threshold is the delay between something occurring on-chain and it making its way into your system. A 30-block threshold creates a delay of about 6 minutes on Ethereum (~5 blocks/minute, one block every 12 seconds). This delay is fine for most use cases, but if you need something closer to real time, this approach would not be a great option.
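The threshold arithmetic is small enough to check directly. This sketch assumes a fixed 12-second block time, which matches the ~5 blocks/minute figure for Ethereum; other chains need their own value, and both helper names are hypothetical.

```typescript
// Highest block number that is at least `threshold` blocks behind the head
function maxSafeBlock(latestBlock: number, threshold: number): number {
  return Math.max(latestBlock - threshold, 0)
}

// Approximate minutes between a block being produced and it becoming safe to sync
function syncDelayMinutes(threshold: number, blockTimeSeconds: number): number {
  return (threshold * blockTimeSeconds) / 60
}

console.log(maxSafeBlock(100, 30))    // 70
console.log(maxSafeBlock(130, 30))    // 100: block 100 becomes eligible
console.log(syncDelayMinutes(30, 12)) // 6
```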

Wrapping up

As the blockchain ecosystem expands to more chains and more protocols, visualizing all of this awesome activity continues to be a challenge. We hope this guide offers a few options and their trade-offs to help you find the right solution.

We’re here to help! Reach out to Indie if you want to collaborate on a project ❤️